There was a great demo of AI-driven robots working in a distribution centre at @OcadoTechnology. The keynote also asked whether we still need old-world relational databases. Their answer was no: customers are moving to open database engines such as MySQL, PostgreSQL and MariaDB.

The forces and market trends driving adoption of new services

Amazon has various database offerings:

- Amazon Aurora (a MySQL-compatible relational database engine that combines the speed and availability of high-end commercial databases)

- Amazon Relational Database Service (covers MySQL, Oracle, SQL Server and PostgreSQL databases in the cloud)

- Amazon DynamoDB (a highly scalable, fully managed NoSQL database service)

- Amazon Redshift (a fully managed, easily scalable petabyte-scale data warehouse service that works with your existing business intelligence tools)

- Amazon ElastiCache (a web service that makes it easy to deploy, operate, and scale an in-memory cache in the cloud)

- AWS Database Migration Service (migrates databases to AWS while the source database stays operational)

Migrating Data to and from AWS

When migrating databases to AWS, you can back up your SQL Server database and restore the backup file to Amazon RDS or to SQL Server running on EC2. The migration steps were:

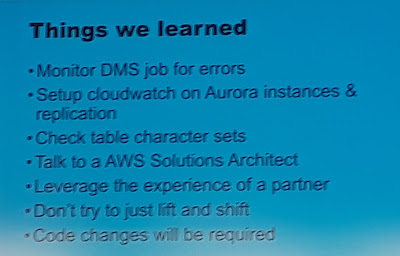

There were various things to learn from the migration.

The Amazon Relational Database Service (Amazon RDS) covers

Amazon Aurora keeps six copies of the data across three availability zones, two copies in each, to protect against the failure of an entire availability zone.
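Why six copies? It helps to treat it as quorum arithmetic. The sketch below is a minimal, hypothetical model, assuming the quorum sizes AWS documents for Aurora storage (a write needs 4 of the 6 copies, a read needs 3) rather than anything shown in the keynote; the availability zone names are made up for illustration.

```python
# Hypothetical model of Aurora-style storage quorums.
# Assumptions (AWS's published design, not from the talk):
# 6 copies over 3 availability zones (2 per AZ), write quorum 4, read quorum 3.

COPIES_PER_AZ = 2
AZS = ("az-a", "az-b", "az-c")  # hypothetical AZ names
TOTAL_COPIES = COPIES_PER_AZ * len(AZS)
WRITE_QUORUM = 4
READ_QUORUM = 3


def surviving_copies(failed_azs, extra_failed_copies=0):
    """Copies left after whole AZs fail plus some extra individual copy failures."""
    lost_azs = set(failed_azs) & set(AZS)  # ignore unknown AZ names
    lost = COPIES_PER_AZ * len(lost_azs) + extra_failed_copies
    return max(TOTAL_COPIES - lost, 0)


def availability(failed_azs, extra_failed_copies=0):
    """Report whether reads and writes can still reach a quorum."""
    alive = surviving_copies(failed_azs, extra_failed_copies)
    return {
        "copies": alive,
        "can_write": alive >= WRITE_QUORUM,
        "can_read": alive >= READ_QUORUM,
    }


if __name__ == "__main__":
    print(availability([]))                               # healthy cluster
    print(availability(["az-a"]))                         # one AZ lost
    print(availability(["az-a"], extra_failed_copies=1))  # AZ plus one more copy
    print(availability(["az-a", "az-b"]))                 # two AZs lost
```

Under these assumptions, losing a whole availability zone still leaves 4 copies, so writes continue; losing an availability zone plus one more copy leaves 3, so reads continue while writes stop. The quorum sizes are chosen so that 4 + 3 > 6 (every read overlaps the latest write) and 4 + 4 > 6 (two writes can never both succeed on disjoint copies).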

There are various analytics services, such as:

- Amazon Athena (an interactive query service to analyse data in Amazon S3 using standard SQL; Athena is serverless)

- AWS Data Pipeline (a web service that helps you reliably process and move data between different AWS compute and storage services, as well as on-premises data sources, at specified intervals)

- AWS Glue (a fully managed ETL service that makes it easy to move data between your data stores)

- Amazon Kinesis (to collect, process, and analyse real-time streaming data)

Artificial Intelligence service offerings are:

- Amazon Lex

- Amazon Polly

- Amazon Rekognition

- Amazon Machine Learning

- AWS Deep Learning AMIs

- Apache MXNet on AWS

AWS was called the centre of gravity for AI.

Machine Learning capabilities explained